This post was most recently updated on April 29th, 2022.

This article explains another simple fix to an annoying issue. I guess that’s how I start most of my little tutorials, but hey, it’s true! I suppose I just have a knack for running into issues that come with poorly documented fixes or workarounds that are obvious, but only in hindsight… Right?

Anyway, this time my WebSocket requests were not being handled as such by my ASP.NET controllers. IntelliSense happily suggested “IsWebSocketRequest”, but at runtime it would always be false. What gives?

Problem

So I was developing a new API that was supposed to accept a WebSocket connection and do something fun with it. My code looked something like this:

    if (HttpContext.WebSockets.IsWebSocketRequest)
    {
        // All of the cool stuff happens here, but we never got to this part!
    }
    else
    {
        // We'd always end up here
    }

The JavaScript code I was using to test the connection was somewhat like this:

    let webSocket = new WebSocket('wss://localhost/ws');

This would already throw an error, and when I debugged it, I noticed my HttpContext.WebSockets.IsWebSocketRequest would always be false!

Well – that’s unexpected. What gives?

Solution

The solution to this problem was, as usual, simple and a little bit unintuitive.

In the following guide, I will explain, in roughly one and a half steps, how you can make your .NET Web API (or whatever ASP.NET-based stuff you’re developing) properly recognize your WebSocket connections!

Time needed: 5 minutes

How to fix HttpContext.WebSockets.IsWebSocketRequest always being false?

- Make sure you have UseWebSockets()

Is this obvious? I guess it should be, but still, – better document it!

The project hosting your controllers needs to be configured to accept WebSocket connections. This can be done in the Startup.cs by simply adding this:app.UseWebSockets();

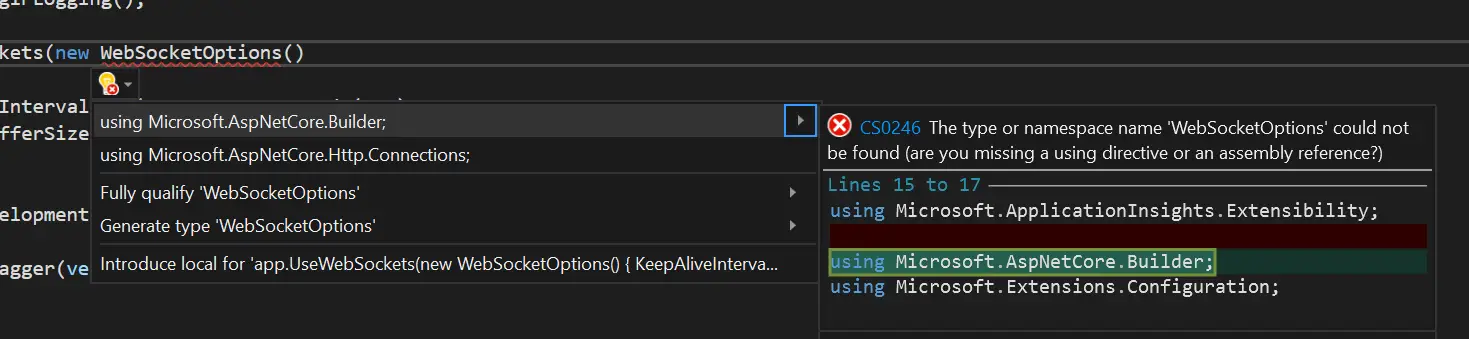

You won’t typically even need additional dependencies, as UseWebSockets() comes from Microsoft.AspNetCore directly. If you want to add configuration options using WebSocketOptions, you’ll need to add the following using statement:using Microsoft.AspNetCore.Builder;

As shown below:
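If you do want to pass WebSocketOptions, a minimal sketch could look something like the one below. The helper name UseConfiguredWebSockets, the two-minute keep-alive and the allowed origin are my own placeholder assumptions, not something your project needs to match; you'd call the helper from Startup.Configure in the same spot where you would otherwise call app.UseWebSockets().

```csharp
using System;
using Microsoft.AspNetCore.Builder;

public static class WebSocketSetup
{
    // Hypothetical helper: wraps UseWebSockets() with explicit options.
    public static IApplicationBuilder UseConfiguredWebSockets(this IApplicationBuilder app)
    {
        var options = new WebSocketOptions
        {
            // How often keep-alive pings are sent to the client (placeholder value).
            KeepAliveInterval = TimeSpan.FromSeconds(120)
        };

        // Optional: only accept WebSocket requests from this origin (placeholder value).
        options.AllowedOrigins.Add("https://localhost");

        return app.UseWebSockets(options);
    }
}
```

If you don't need any of this, the plain app.UseWebSockets() call is enough.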

- Make sure your UseWebSockets() is in the right place

If you’re like me, you were smart enough to add the UseWebSockets() call, but dumb enough to add it in the wrong place.

This needs to occur before app.UseEndpoints()!

So, your Startup.cs should look something like this:

    // you'll probably have these first:
    app.UseRouting();
    app.UseCors("CorsPolicy");
    app.UseAuthentication();
    app.UseAuthorization();

    // now's a good time for WebSockets:
    app.UseWebSockets();

    // most other stuff here

    // and finally (map whatever endpoints your app actually uses):
    app.UseEndpoints(endpoints => endpoints.MapControllers());

Yes, this was the “half step”. Or maybe it was the first one. Still, should be pretty simple overall!

- Rebuild and run!

Yep – with that, you should be good!
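Once IsWebSocketRequest finally returns true, the “cool stuff” branch can accept the connection. Here's a rough sketch of what that might look like, assuming a controller that simply echoes messages back. The controller name, the "/ws" route (picked to match the test URL above) and the buffer size are my assumptions, so adapt them to whatever your API actually does.

```csharp
using System;
using System.Net.WebSockets;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;

// Hypothetical controller, for illustration only.
public class WebSocketController : ControllerBase
{
    [HttpGet("/ws")]
    public async Task Get()
    {
        if (!HttpContext.WebSockets.IsWebSocketRequest)
        {
            // Plain HTTP request (or the middleware is missing / in the wrong place).
            HttpContext.Response.StatusCode = StatusCodes.Status400BadRequest;
            return;
        }

        // Complete the handshake and get the socket.
        using WebSocket socket = await HttpContext.WebSockets.AcceptWebSocketAsync();
        var buffer = new byte[4 * 1024];

        WebSocketReceiveResult result = await socket.ReceiveAsync(
            new ArraySegment<byte>(buffer), CancellationToken.None);

        // Echo every frame back until the client asks to close.
        while (!result.CloseStatus.HasValue)
        {
            await socket.SendAsync(
                new ArraySegment<byte>(buffer, 0, result.Count),
                result.MessageType,
                result.EndOfMessage,
                CancellationToken.None);

            result = await socket.ReceiveAsync(
                new ArraySegment<byte>(buffer), CancellationToken.None);
        }

        await socket.CloseAsync(
            result.CloseStatus.Value,
            result.CloseStatusDescription,
            CancellationToken.None);
    }
}
```

If the JavaScript test connection still fails and you land in the 400 branch, the middleware order from the previous step is the first thing I'd re-check.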

… and there you go! This guide is valid for at least .NET Core 3.1.

Did it help? Was it utterly useless? Did you find another solution? Let me know in the comments below!
