This post was most recently updated on April 23rd, 2025.

Another (possibly?) timely and weird one here! This article explains how to work around Azure DevOps suddenly refusing to play nice with Docker and the rest of our tooling, causing all builds to fail with oddly nondescript error messages.

Annoying to deal with but not the end of the world as we’ll see!

Background

Another odd one for the books! No idea what caused it, but suddenly our pipelines started looking like this, with no clear reason (I mean, we break builds all the time… But all builds at once?)

Something smells fishy here…

Problem

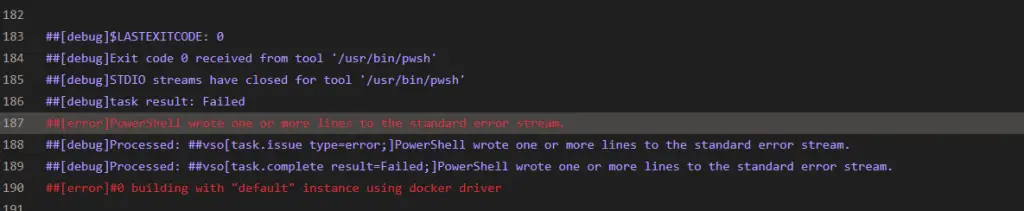

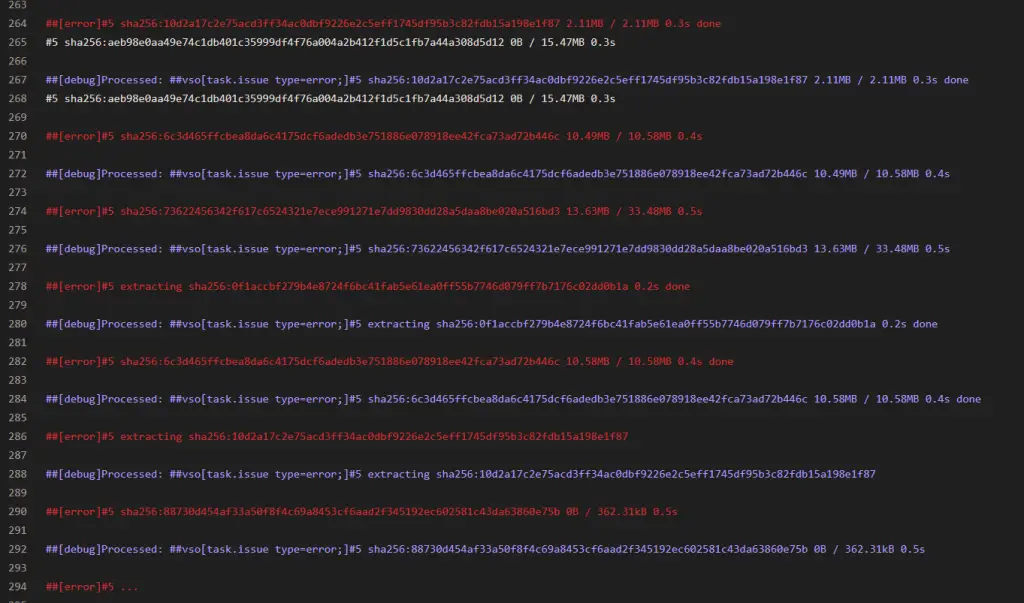

I decided to take a closer look – I quickly enabled debug logging and jumped in to see what was causing the builds to fail.

Oddly, the “failing” lines started with this:

Below in text format:

    ##[debug]$LASTEXITCODE: 0
    ##[debug]Exit code 0 received from tool '/usr/bin/pwsh'
    ##[debug]STDIO streams have closed for tool '/usr/bin/pwsh'
    ##[debug]task result: Failed
    ##[error]PowerShell wrote one or more lines to the standard error stream.
    ##[debug]Processed: ##vso[task.issue type=error;]PowerShell wrote one or more lines to the standard error stream.
    ##[debug]Processed: ##vso[task.complete result=Failed;]PowerShell wrote one or more lines to the standard error stream.
    ##[error]#0 building with "default" instance using docker driver

So the exit code was 0, and the first “red line” (highlighting an error) in the whole log was “PowerShell wrote one or more lines to the standard error stream.”

But.. That’s not true, is it?

And it gets weirder from there. Every line of output seems to be marked as erroneous!

So, really, the task has 0 as the $LASTEXITCODE and 0 as the exit code, the STDIO streams are closed, and there are no visible errors. The best clue we had was the following line:

    PowerShell wrote one or more lines to the standard error stream.

What do?
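To convince yourself that this symptom can appear without any real error, a throwaway step like the one below should reproduce it. This is a minimal sketch, assuming failOnStderr was enabled on the failing task (the post does not confirm that); the display name and the message text are made up for illustration.

```yaml
- task: PowerShell@2
  displayName: Reproduce stderr failure  # hypothetical name
  inputs:
    targetType: inline
    pwsh: true
    failOnStderr: true  # assumption: the failing tasks had this enabled
    script: |
      # Write one harmless line straight to the process's stderr stream
      [Console]::Error.WriteLine("just a progress message")
      # Exit successfully; the exit code alone would not fail the task
      exit 0
```

If the assumption holds, this step should end up failed with the same “PowerShell wrote one or more lines to the standard error stream.” message, even though the script exits with 0.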

Reason

I’d love to know what suddenly caused the pipeline to start failing – this only started a few days ago (or a few weeks before the time of publishing if everything goes the way I’ve planned), but Microsoft does constantly update the agent images, so who knows if the issue still exists by then :)

Solution

Let’s go through the steps I took to fix this (maybe they’ll help you too!)

Time needed: 30 minutes

How to fix an Azure DevOps pipeline failing due to erroneous errors?

- Easy fix: Ignore STDERR

A good starting point is to make sure you’re NOT failing on STDERR output. I mean, sometimes you might want to – but I’d guess most times you don’t.

You should know best – but take a look at the reference task below:

    - task: PowerShell@2
      displayName: Build images
      inputs:
        targetType: inline
        pwsh: true
        failOnStderr: false # this makes the task ignore stderr output if it otherwise succeeded (false should already be the default)
        script: |
          # your docker stuff here
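With stderr no longer failing the task, the exit code is the remaining signal. As far as I know, the task only propagates the exit code of the last external command by default, so a script running several Docker commands may want to check each one. A sketch of that idea; the Assert-LastExitCode helper, the image name and the second command are all hypothetical:

```yaml
- task: PowerShell@2
  displayName: Build images
  inputs:
    targetType: inline
    pwsh: true
    failOnStderr: false
    script: |
      # Hypothetical helper: stop the script as soon as a native command fails
      function Assert-LastExitCode([string]$What) {
        if ($LASTEXITCODE -ne 0) {
          # Log a pipeline error, then propagate the tool's exit code
          Write-Host "##vso[task.logissue type=error]$What failed with exit code $LASTEXITCODE"
          exit $LASTEXITCODE
        }
      }

      docker build -t my-image .   # hypothetical image name
      Assert-LastExitCode "docker build"

      docker push my-image         # hypothetical second command
      Assert-LastExitCode "docker push"
```

This way chatty output on STDERR is tolerated, while a non-zero exit code from any single command still fails the task.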

Give failOnStderr: false a go if you had it set to “true”.

- Dumb fix: Ignore task failing

You could always decide to ignore any errors a task might throw. In order to achieve that, do this in your task configuration:

    - task: PowerShell@2
      displayName: Build images
      continueOnError: true # this will make the pipeline ignore all errors from this task and continue anyway
      inputs:
        targetType: inline
        pwsh: true
        script: |
          # your docker stuff here
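If you do go down this road, it may help to at least make the ignored failure visible. As far as I understand, a task that fails with continueOnError: true leaves the job in a SucceededWithIssues state, which a later step can read from the predefined Agent.JobStatus variable. A sketch of such a follow-up step (the step and its wording are hypothetical, not part of the original pipeline):

```yaml
- task: PowerShell@2
  displayName: Report build status  # hypothetical follow-up step
  condition: always()
  inputs:
    targetType: inline
    pwsh: true
    script: |
      # Agent.JobStatus is exposed to scripts as the AGENT_JOBSTATUS environment variable
      Write-Host "Job status so far: $env:AGENT_JOBSTATUS"
      if ($env:AGENT_JOBSTATUS -eq 'SucceededWithIssues') {
        # Surface a warning so the ignored failure does not go unnoticed
        Write-Host "##vso[task.logissue type=warning]An earlier step reported errors that were ignored."
      }
```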

Using continueOnError is not pretty, but it’ll make your task ignore the errors. A lot of the time this is not what you want, as it’ll ignore a lot of meaningful and actually critical errors too!

- Prettier fix: redirect STDERR to STDOUT

A slightly better fix (or maybe it’s really just a workaround) is to redirect your STDERR stream, which for whatever faulty reason is receiving output that fails your task, to STDOUT.

Just another thing to try, right? Consider below:

    - task: PowerShell@2
      displayName: Build images
      inputs:
        targetType: inline
        pwsh: true
        script: |
          yourBuildTool.exe "yourargument" 2>&1

Or even:

    - task: PowerShell@2
      displayName: Build images
      inputs:
        targetType: inline
        pwsh: true
        script: |
          docker build -t my-image . 2>&1
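A slightly more defensive variant of the same idea, sketched under the assumption that you’re on PowerShell 7 (pwsh: true): redirected STDERR lines arrive as error records, so converting each one to a plain string may keep the log easier to read, and an explicit exit code check keeps real failures failing. The image name is a placeholder.

```yaml
- task: PowerShell@2
  displayName: Build images
  inputs:
    targetType: inline
    pwsh: true
    script: |
      # Merge stderr into stdout, then turn every record into a plain string
      docker build -t my-image . 2>&1 | ForEach-Object { "$_" }
      # $LASTEXITCODE still holds docker's exit code; fail the task if it is non-zero
      if ($LASTEXITCODE -ne 0) { exit $LASTEXITCODE }
```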

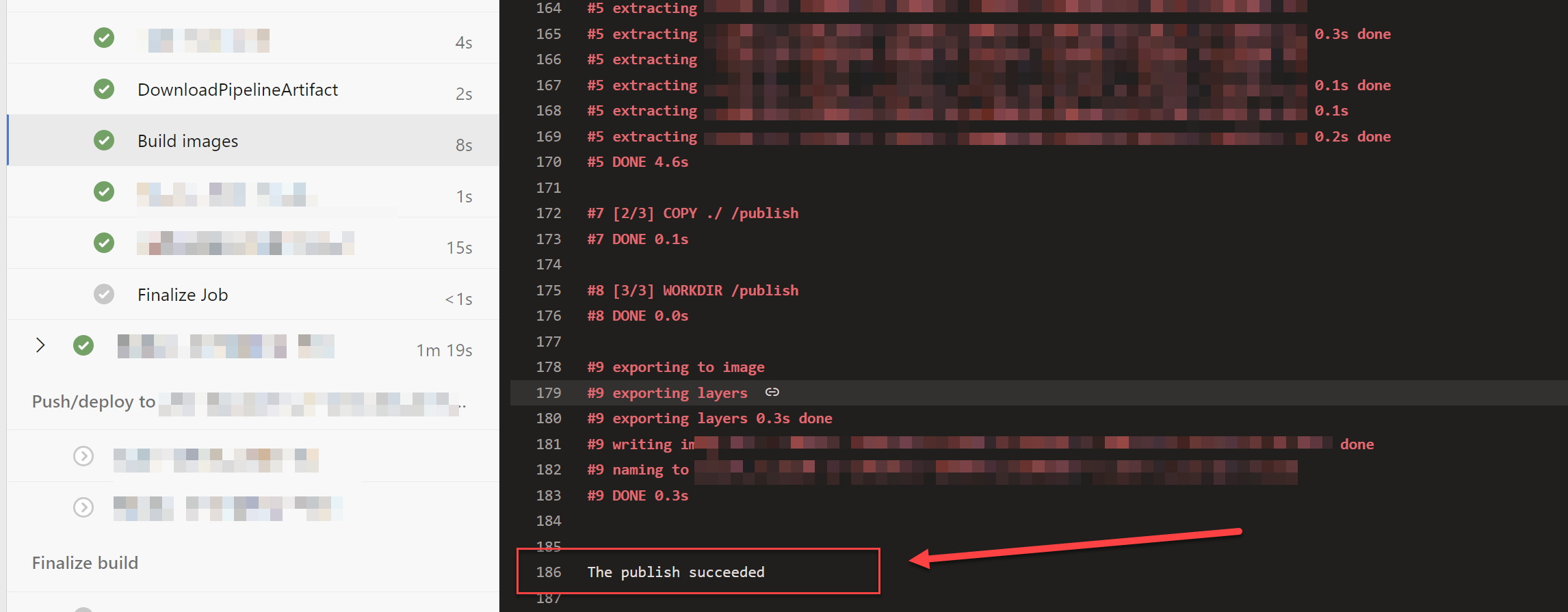

These samples will instruct your terminal – in my case, PowerShell (Core) – to redirect STDERR to STDOUT. This’ll make sure you won’t get output to the error stream. But what you will get is all of the output in the logs – and it looks a bit… Wonky.

Why? Well, see below:

“Build images” was successful. With plenty of red lines in the log. But who cares, right?

- Actual fix: Who the hell knows?

So with any of the above you should have been able to fix the issue. I sure was. Granted, none of the fixes actually addresses the underlying issue: Docker commands in Azure DevOps are, for whatever reason, outputting to STDERR in our pipelines, even though no errors occurred.

Eh, when I figure out why this happens (could be Azure DevOps build agents, Docker, an odd config in the pipeline… Or our underlying tooling!) I’ll update the post.
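In the meantime, one way to narrow this down is to check whether Docker itself writes to STDERR on a successful build. BuildKit-based builds are known to send their progress output there, and the “#0 building with "default" instance using docker driver” line from the log looks like that kind of output, but for this pipeline that is only a guess. A rough diagnostic sketch; the step name, image name and log file name are arbitrary choices:

```yaml
- task: PowerShell@2
  displayName: Inspect docker stderr  # hypothetical diagnostic step
  inputs:
    targetType: inline
    pwsh: true
    script: |
      # Send only stderr to a file in the agent's temp directory
      $log = Join-Path $env:AGENT_TEMPDIRECTORY 'docker-stderr.log'
      docker build -t my-image . 2> $log
      $exitCode = $LASTEXITCODE

      # Count what ended up on stderr, whether or not the build succeeded
      $stderrLines = @(Get-Content -Path $log)
      Write-Host "docker exit code: $exitCode"
      Write-Host "lines written to stderr: $($stderrLines.Count)"
      exit $exitCode
```

An exit code of 0 together with a non-zero line count would suggest the tool is simply chatty on STDERR rather than actually failing.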

Alright – so that’s that for today. Another odd one for the books.
